Domino Data Science Blog

Dr J Rogel-Salazar

Dr Jesus Rogel-Salazar is a Research Associate in the Photonics Group in the Department of Physics at Imperial College London. He obtained his PhD in quantum atom optics at Imperial College in the group of Professor Geoff New and in collaboration with the Bose-Einstein Condensation Group in Oxford with Professor Keith Burnett. After completing his doctorate in 2003, he took a postdoc in the Centre for Cold Matter at Imperial and moved on to the Department of Mathematics in the Applied Analysis and Computation Group with Professor Jeff Cash.

Diffusion Models – More Than Adding Noise

Go to your favourite social media outlet and use the search functionality to look for DALL-E. You can take a look at this link to see some examples on Twitter. Scroll up and down a bit, and you will see some images that, at first sight, may be very recognisable. Depending on the scenes depicted, if you pay a bit more attention you may see that in some cases something is not quite right with the images. At best there may be a bit (or a lot) of distortion, and in some other cases the scene is totally wacky. No, the artist did not intend to include that distortion or wackiness, and for that matter it is quite likely the artist is not even human. After all, DALL-E is a computer model, so called as a portmanteau of the beloved Pixar robot Wall-E and the surrealist artist Salvador Dalí.

By Dr J Rogel-Salazar | 12 min read

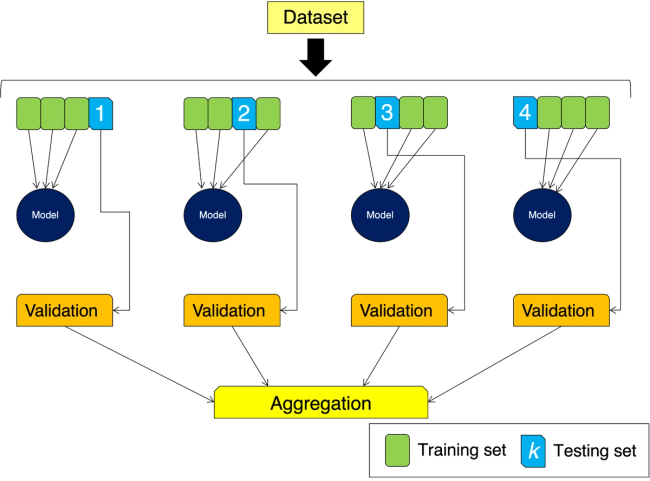

Building Robust Models with Cross-Validation in Python

So, you have a machine learning model! Congratulations! Now what? Well, as I always remind colleagues, there is no such thing as a perfect model, only good enough ones. With that in mind, the natural thing to do is to ensure that the machine learning model you have trained is robust and can generalise well to unseen data. On the one hand, we need to ensure that our model is not under- or overfitting, and on the other, we need to optimise any hyperparameters present in our model. In other words, we are interested in model validation and selection.

By Dr J Rogel-Salazar | 16 min read

Transformers - Self-Attention to the rescue

If the mention of "Transformers" brings to mind the adventures of autonomous robots in disguise, you are probably, like me, a child of the 80s: playing with Cybertronians who blend in as trucks, planes and even microcassette recorders or dinosaurs. As much as I would like to talk about that kind of transformers, the subject of this blog post is the transformers proposed by Vaswani and team in the 2017 paper titled "Attention is all you need". We will be covering what transformers are and how the idea of self-attention works. This will help us understand why transformers are taking over the world of machine learning and doing so not in disguise.

By Dr J Rogel-Salazar | 14 min read

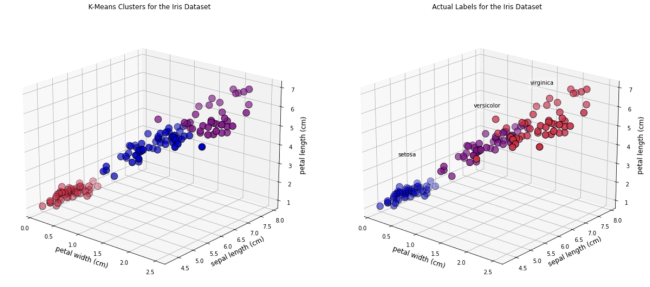

Getting started with k-means clustering in Python

Imagine you are an accomplished marketeer establishing a new campaign for a product and want to find appropriate segments to target, or you are a lawyer interested in grouping together different documents depending on their content, or you are analysing credit card transactions to identify similar patterns. In all those cases, and many more, data science can be used to help cluster your data. Clustering analysis is an important area of unsupervised learning that helps us group data together. We have discussed the difference between supervised and unsupervised learning in this blog in the past. As a reminder, we use unsupervised learning when labelled data is not available for our purposes but we want to explore common features in the data. In the examples above, as a marketeer we may find common demographic characteristics in our target audience; as a lawyer we may establish common themes in the documents in question; and as a fraud analyst we may establish common transactions that help highlight outliers in someone’s account.

By Dr J Rogel-Salazar | 13 min read

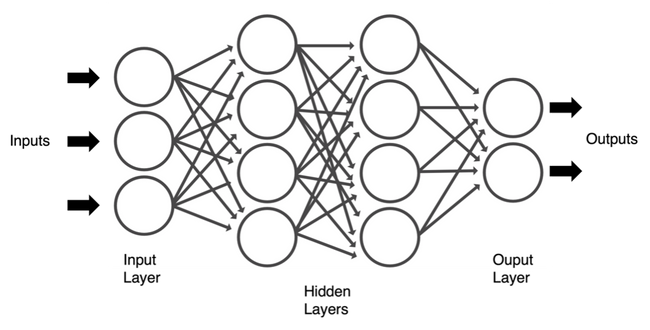

TensorFlow, PyTorch or Keras for Deep Learning

Machine learning provides us with ways to create data-powered systems that learn and enhance themselves, without being specifically programmed for the task at hand. As machine learning algorithms go, there is one class that has captured the imagination of many of us: deep learning. Surely you have heard of many fantastic applications where deep learning is being employed. For example, take the auto industry, where self-driving cars are powered by convolutional neural networks, or look at how recurrent neural networks are used for language translation and understanding. It is also worth mentioning the many different applications of neural networks in medical image recognition.

By Dr J Rogel-Salazar | 13 min read

Powering Up Machine Learning with GPUs

Whether you are a machine learning enthusiast or a ninja data scientist training models for all sorts of applications, you may have heard of the need to use graphics processing units (GPUs) to squeeze out the best performance when training and scaling your models. In short, training tasks that take a few minutes to complete on a CPU with small datasets may take hours, days, or even weeks on larger datasets if a GPU is not used. GPU acceleration is a topic we have previously addressed; see "Faster Deep Learning with GPUs and Theano".

By Dr J Rogel-Salazar | 14 min read
