Data Science in the Senses

Paco Nathan | 2019-05-10 | 8 min read

By Paco Nathan, Managing Partner, Derwen, Inc., on May 10, 2019

This blog post was originally published on KDnuggets.

Our evening event at Rev conference this year will be Data Science in the Senses. That’s on Thursday, May 23 in NYC.

Register today for Rev and use this code for a discount: PACORev25 — see the end of this article for more details about the conference.

The Data Science in the Senses event will showcase amazing projects now making waves through the realms of AI in art (Botnik, folk RNN, Ben Snell, Josh Urban Davis, and more), all of which leverage data and machine learning for sensory experiences. Here’s a sneak peek at the experience:

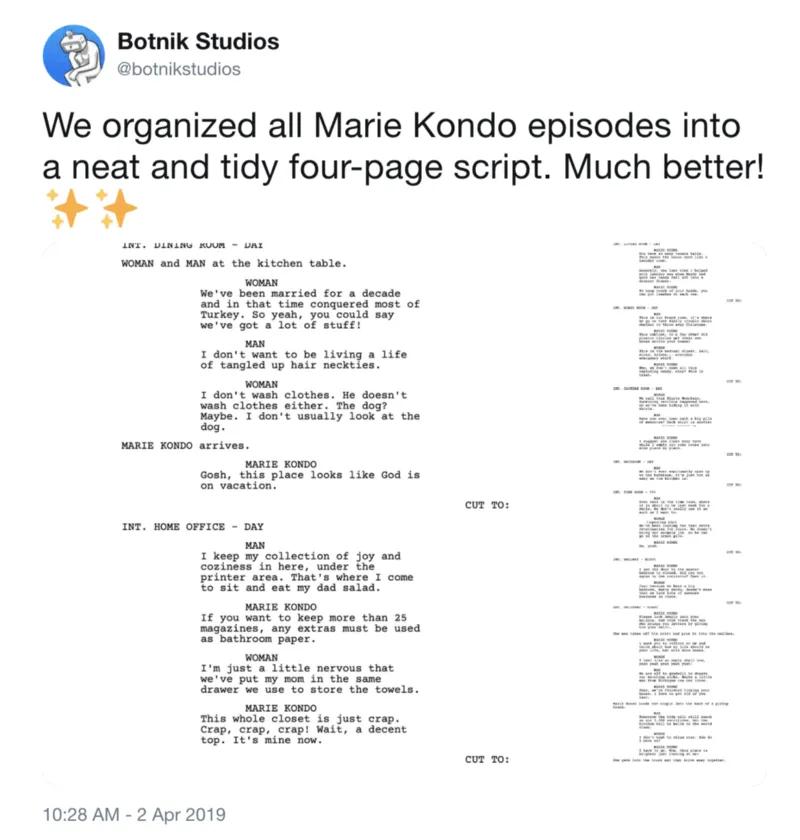

Botnik is a community of writers, artists, and software developers who use machines to create interesting things. Check out their gallery online for examples, such as new twangy tunes generated by a model trained on 70,000+ existing country songs. Or romance novels generated by deep learning that’s been trained on over 20,000 Harlequin Romance titles. Who can deny such irresistible guilty pleasures as Midwife Cowpoke or The Man for Dr. Husband, courtesy of AI? Or (my favorite) this super-helpful tidying up of all the messy Marie Kondo episodes into one condensed script:

Now that definitely sparks joy. Their works are the result of big data, some text analytics, probability, and ample doses of irony, which Botnik performs as a live show, sometimes even as karaoke-style AI-augmented antics. At our Data Science in the Senses event, they’ll be giving a talk to explain the magic and performing live!
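For readers curious how "text analytics" and "probability" can combine into word suggestions, here is a minimal sketch. It is not Botnik's actual method. It is a toy bigram model that counts which word follows which, then ranks candidate next words. The corpus string and the function names `build_bigram_counts` and `suggest_next` are made up for illustration.

```python
from collections import Counter, defaultdict


def build_bigram_counts(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def suggest_next(counts, word, k=3):
    """Return up to k of the most frequent followers of `word`."""
    followers = counts.get(word.lower(), Counter())
    return [candidate for candidate, _ in followers.most_common(k)]


# Hypothetical toy corpus; a real system would train on thousands of documents.
corpus = "fold the shirt and thank the shirt and keep the shirt that sparks joy"
counts = build_bigram_counts(corpus)
print(suggest_next(counts, "the"))
```

A human writer picking among such suggestions, word by word, is one plausible way to end up with text that sounds almost but not quite like its source.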

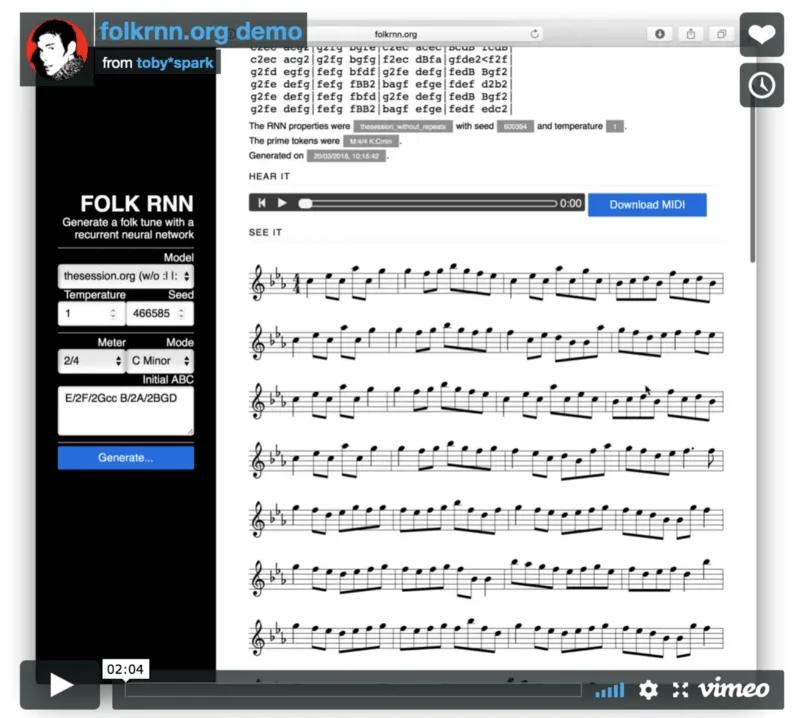

folk RNN, based at Kingston University in London, uses deep learning to learn Celtic folk music from scores, and then generates new sheet music for people to play. Check out their open source GitHub repo. The O’Reilly AI London 2018 conference featured folk RNN in the spine-tingling AI Music: Deep Learning for Music Making event at St. James’s Sussex Gardens. At Data Science in the Senses, folk RNN will screen a short video that introduces their work, plus they’ve provided a playlist for the event’s DJ. They’ll also have a booth with headphones and a monitor to demo the https://folkrnn.org/ software, where Rev attendees can generate their own songs.

Rumor has it that Botnik and folk RNN have been collaborating based on an introduction made through Rev conference. One uses AI to generate new music scores, while the other writes lyrics. We’re eager to see what follows!

Ben Snell, based in NYC, is an AI artist who investigates materialities and ecologies of computation. For one fascinating example, Ben has trained his computer to become a sculptor: spending months sifting through museum collections, studying the masters far and wide, honing its techniques, attempting to recreate from memory each and every classical sculpture it had ever glimpsed. Affectionately named Dio (after the Greek deity Dionysus), the machine learning application has translated its studies into a visual vocabulary of component parts, from which it builds up even more complex shapes and expressions. As a capstone project after all that work, Ben posed a simple challenge for Dio: “Close your eyes, then dream of a new form, something that’s never existed before.”

“Traces of the computer’s invisible processing power live on in its bodily form and in the bits of matter containing its thoughts and memories.” Come see Ben’s exhibits at our Data Science in the Senses event and experience the materiality of Dio’s dreams made manifest, one of the first sculptures created by AI.

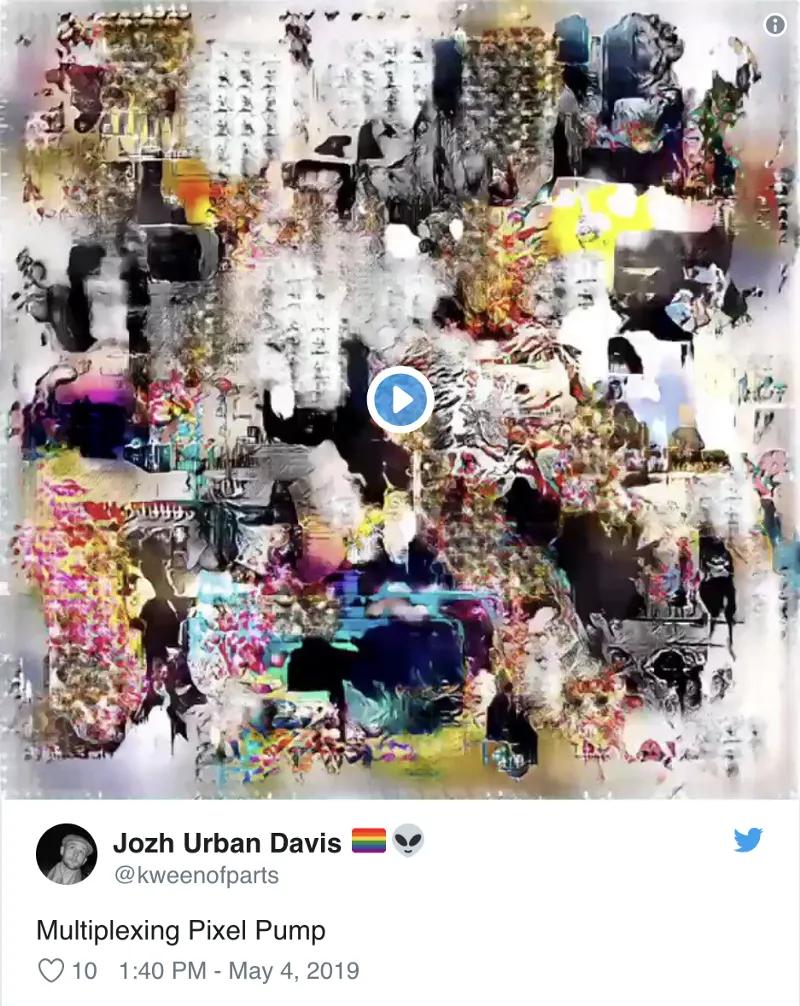

Josh Urban Davis is a Dartmouth researcher in Human-Computer Interaction and a weird futurist who blends machine learning with advanced user interfaces to generate disturbing yet pleasing works of art. Check out the spellbinding gallery on Instagram, Synapstraction: Brain-Computer Interaction for Tangible Abstract Painting, and the un-unseeable Multiplexing Pixel Pump:

Josh will present a brief talk about creating animations, and have an installation featuring a composite of his GAN-generated animations to loop throughout the evening.

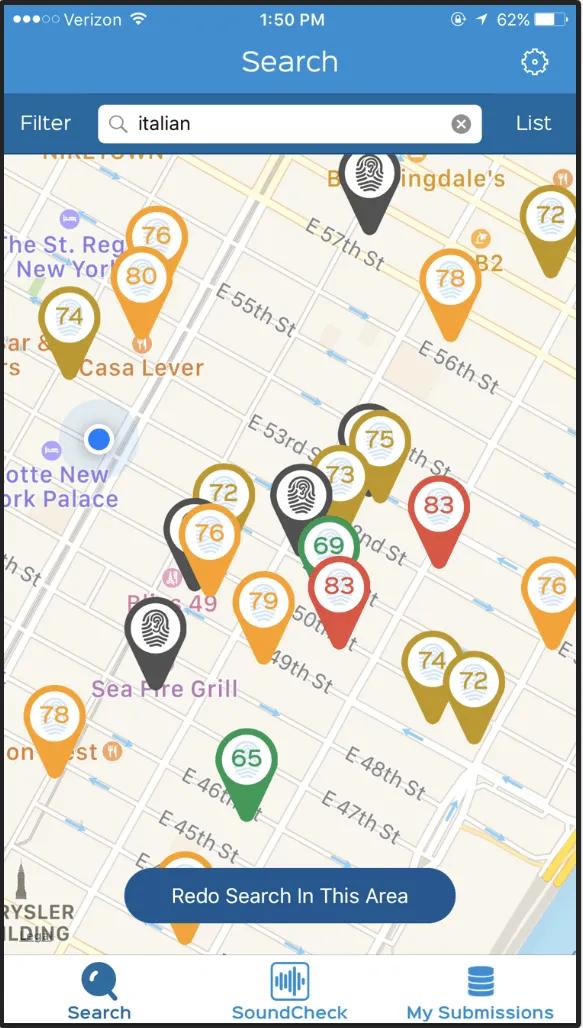

SoundPrint — The last time that you were out at a restaurant or bar, could you barely even hear the others at your table? Did you have to shout, just to talk with each other? Yeah, me too. We can all thank the infamous “top chef” Mario Batali for establishing the deafening trend in US restaurants.

In response, SoundPrint got busy with a smartphone app to measure and analyze sound levels. They’ve built a network of SoundPrint “ambassadors” (including yours truly) quietly collecting decibel measurements of restaurants, bars, coffeehouses, and other public spaces worldwide, all gathered into a geospatial database of sound estimates: “Like Yelp!, but for noise.” Their crowdsourced solution is great for checking whether a venue is suitable for conversation and safe for hearing health, and for finding a quieter spot for a date or business meeting.
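One detail worth knowing when combining crowdsourced decibel readings: decibels are logarithmic, so a plain arithmetic mean understates loud venues. The sketch below is an illustration only, not SoundPrint's actual pipeline. It averages readings in the power domain and converts back to decibels. The function name `average_decibels` and the sample readings are hypothetical.

```python
import math


def average_decibels(readings_db):
    """Energy-average a list of sound levels given in decibels."""
    if not readings_db:
        raise ValueError("need at least one reading")
    # Convert each level to relative power, average, then convert back to dB.
    mean_power = sum(10 ** (level / 10) for level in readings_db) / len(readings_db)
    return 10 * math.log10(mean_power)


# Hypothetical readings from two visits to the same venue.
quiet_visit, loud_visit = 60.0, 80.0
print(round(average_decibels([quiet_visit, loud_visit]), 1))
```

The result lands well above the arithmetic mean of 70 dB, because the louder visit carries far more acoustic energy.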

More recently, SoundPrint has been expanding their database work to include hearing health analysis, analysis of office and hospital settings to ensure compliance with noise guidelines, predictive scoring, acoustic forensics, and more. SoundPrint will be presenting at Data Science in the Senses, plus they’ll have a booth for attendees to learn about using the app and their dataset. BTW, SoundPrint is looking for data science teams to partner with — for those who have interesting use cases for the data. Catch them at Rev!

Analytical Flavor Systems — the makers of Gastrograph AI — uses machine learning to create services for food and beverage producers, helping them model, understand, and optimize flavor / aroma / texture for target consumer demographics. Their innovations quantify product flavor profiles and their raw ingredient components.

At the Data Science in the Senses event, Analytical Flavor Systems will have a booth where attendees can use an app to set up preferences based on what they taste, then formulate a personalized beverage. Make that a double!

Kineviz develops visual analytics software and solutions leveraging their GraphXR platform, for applications ranging across law enforcement, medical research, and knowledge management. GraphXR uses Neo4j to analyze links, properties, time series, and spatial data within a unified, animated context. At the Data Science in the Senses event, Kineviz will present their analysis and visualizations for Game of Thrones data. Srsly, could Tormund Giantsbane be Lyanna Mormont’s father? #GameofThrones

I’m super excited about Rev conference, our Data Science in the Senses evening event as a social mixer after the session talks, our excellent line-up of speakers presenting about leadership in data science, and what enterprise teams can learn from each other. Plus, we’ll have Nobel laureate Daniel Kahneman as our headline keynote, presenting “The Psychology of Intuitive Judgment and Choices,” a detailed exploration of machine learning vis-à-vis modern behavioral economics and long-held assumptions about the supposed role of human rationality in decision making.

Register today for Rev and use this code for a discount: PACORev25.

See you there!
