Today, one of the biggest challenges facing data scientists is taking models from development to production in an efficient and reproducible way. Machine learning (ML) pipelines address this challenge by identifying the steps involved in the process. Once the steps are defined, they can be automated and orchestrated, streamlining the data science lifecycle.

In a nutshell, machine learning operations (MLOps) pipelines abstract each part of the ML workflow into actionable modules, which in turn allow the data science lifecycle to be automated. This helps develop, train, validate, and deploy models to production faster.

In this article, you'll learn about the structure of a typical ML pipeline as well as best practices and tips to consider when designing a best-in-class MLOps pipeline.

What Does an MLOps Pipeline Look Like?

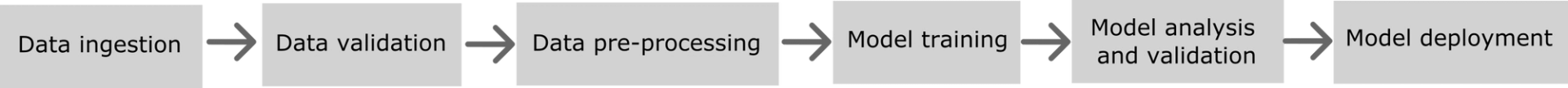

In much of the machine learning literature currently available, as well as in the documentation of many data science platforms, you'll find ML pipeline diagrams like the following:

As you can see, there are six well-defined stages in a conventional ML pipeline:

- Data collection: Data collection consists of the processes related to data ingestion, including transporting raw data from different sources to its storage location, generally a database. From there, it can be accessed by team members for accuracy and quality validations.

- Data validation: In this stage, the data is validated for accuracy and quality.

- Data pre-processing: The previously validated raw data is prepared so that it can be processed by different ML tools. This includes converting the raw data into features that are suitable for use.

- Model training: This is where data scientists experiment by training and tuning multiple models before selecting the best model.

- Model analysis and validation: During this stage, the performance metrics of the trained models are validated before the selected model is deployed to production.

- Model deployment: This stage consists of registering a model and having it ready for use by the business.
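The six stages above can be sketched as a chain of small functions that pass a shared state along. This is only an illustration: the stage bodies, the toy data, and the `0.5` approval threshold are hypothetical placeholders, not part of any particular platform.

```python
# A minimal sketch of the six conventional stages as composable functions.
# All stage logic below is a toy placeholder chosen for illustration.

def collect(state):
    # Data collection: ingest raw records (hard-coded here for the example).
    return {**state, "raw": [1.0, 2.0, None, 4.0]}

def validate(state):
    # Data validation: drop records that fail a basic quality check.
    return {**state, "validated": [x for x in state["raw"] if x is not None]}

def preprocess(state):
    # Data pre-processing: scale validated values into features.
    values = state["validated"]
    top = max(values)
    return {**state, "features": [x / top for x in values]}

def train(state):
    # Model training: the "model" is just the mean of the features.
    feats = state["features"]
    return {**state, "model": {"mean": sum(feats) / len(feats)}}

def analyze(state):
    # Model analysis and validation: compute a simple error metric.
    mean = state["model"]["mean"]
    feats = state["features"]
    mae = sum(abs(x - mean) for x in feats) / len(feats)
    return {**state, "metrics": {"mae": mae}}

def deploy(state):
    # Model deployment: only register the model if the metric is acceptable.
    return {**state, "deployed": state["metrics"]["mae"] < 0.5}

PIPELINE = [collect, validate, preprocess, train, analyze, deploy]

def run_pipeline(stages, state=None):
    """Run each stage in order, feeding it the state from the previous one."""
    state = dict(state or {})
    for stage in stages:
        state = stage(state)
    return state

result = run_pipeline(PIPELINE)
```

Because every stage has the same shape (state in, state out), a stage can be swapped or tested in isolation without touching the others.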

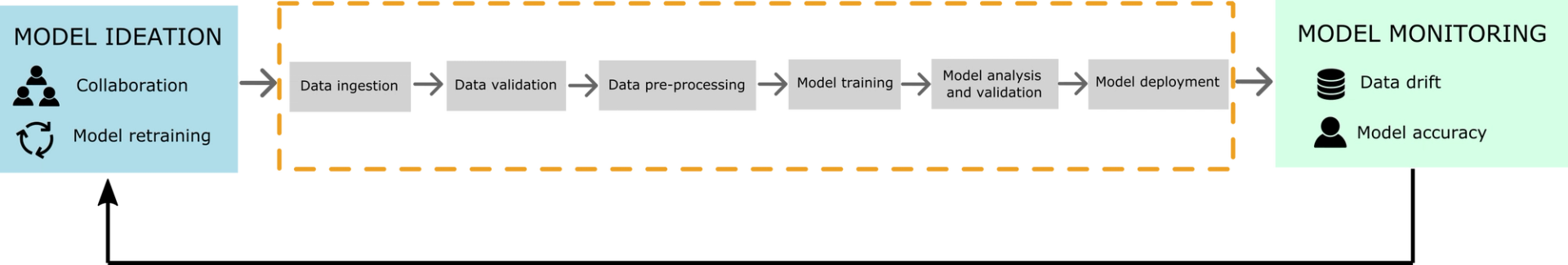

There's a different way of thinking about MLOps pipelines that take into account the entire ML model lifecycle, from the moment the project is initiated until it's monitored in projection (including eventual retraining):

In the rest of this article, you'll learn about what you need to take into account when designing a best-in-class MLOps pipeline.

Best Practices for Designing a Best-in-Class MLOps Pipeline

The point of designing a best-in-class MLOps pipeline is to minimize friction across the model lifecycle. This can be done through automation; however, it's easier said than done.

As you saw in the previous section, the MLOps pipeline consists of many moving parts, so achieving synergy between them is complex, let alone automating it. Fortunately, the tips and best practices listed below can help your team in this effort.

Divide and Rule

Because designing an MLOps pipeline can be a daunting task, it's considered a best practice to break it down into smaller, more manageable steps. The key here is to define each process thoroughly in order to facilitate its optimization and subsequent automation.

For example, in the development stage, one process that lends itself to optimization is provisioning one or more ML frameworks or tools for your team's experiments. Although it seems simple at first glance, this step has its own challenges, such as dependency conflicts between software versions and determining the resources the tools require. For this reason, another useful tip is to limit the scope of each pipeline step to keep it from becoming too complex.

A reference for what the ideal result would look like can be found in Domino's Automated Environment Creation, which allows data scientists to use a variety of distributed computing frameworks and IDEs that are automatically provisioned in Docker images and linked to their files and data sources.

Keep It Simple When Orchestrating Your MLOps Pipeline

Once you have well-defined steps, you should proceed to orchestrate the MLOps pipeline. In other words, establish how the data will be processed and in what order it will move from one step to the next. A good tip is to avoid unnecessary complexity and use ML orchestration tools that facilitate this task. However, keep in mind that the learning curve for most ML orchestration systems tends to be steep, so any time savings from these tools assume your team has already overcome this hurdle.
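At its core, orchestration means declaring which steps depend on which and deriving a valid execution order from that. The following is a minimal sketch using Python's standard-library `graphlib`; the step names and the `DEPENDENCIES` mapping are assumptions made for illustration, and a real orchestration tool adds scheduling, retries, and logging on top of this idea.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: each step lists the steps that must finish first.
DEPENDENCIES = {
    "collect": set(),
    "validate": {"collect"},
    "preprocess": {"validate"},
    "train": {"preprocess"},
    "analyze": {"train"},
    "deploy": {"analyze"},
}

def execution_order(dependencies):
    """Return the steps in an order that respects every declared dependency."""
    return list(TopologicalSorter(dependencies).static_order())

order = execution_order(DEPENDENCIES)
```

Keeping the dependency map as plain data is one way to avoid unnecessary complexity: adding a step means adding one entry rather than rewriting control flow.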

If you're looking for the most efficient way to resolve this trade-off, the answer lies in Domino's data science platform, the only one that provides end-to-end orchestration of the data science lifecycle.

Don't Forget to Keep Track of All the Changes

Designing an MLOps pipeline consumes considerable resources. For this reason, it's a best practice to keep track of all the changes you make to the pipeline. This facilitates reusing or repurposing pipelines, which in turn speeds up the development of new models.

In addition, a similar principle applies to your experiments. Keeping track of experiments and models allows for easy reproduction. One of the most valuable features of Domino's Experiment Manager is its ability to keep track of all results as well as the code, data, Docker image, parameters, and other artifacts required to reproduce them. Moreover, the Experiment Manager versions each run automatically and allows your team to tag and comment on runs to better keep track of the results and determine which configuration scores best.
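To make the idea of run tracking concrete, here is a minimal sketch of a record that captures what is needed to reproduce and compare experiments. It is not Domino's Experiment Manager API; `RunRecord`, `fingerprint`, `best_run`, and the sample values are hypothetical names and data used only for illustration.

```python
import hashlib
import json
from dataclasses import dataclass, field

@dataclass
class RunRecord:
    """Everything needed to reproduce and compare one experiment run."""
    params: dict            # hyperparameters used for the run
    metrics: dict           # results produced by the run
    code_version: str       # e.g. a commit hash (placeholder value below)
    data_fingerprint: str   # hash of the training data
    tags: list = field(default_factory=list)

def fingerprint(rows):
    """Hash the data so that two runs can prove they used the same input."""
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()

def best_run(runs, metric):
    """Pick the run with the highest value for the given metric."""
    return max(runs, key=lambda run: run.metrics[metric])

data = [[0.1, 1], [0.4, 0]]  # toy dataset
runs = [
    RunRecord({"lr": 0.1}, {"accuracy": 0.81}, "abc123", fingerprint(data)),
    RunRecord({"lr": 0.01}, {"accuracy": 0.86}, "abc123", fingerprint(data),
              tags=["candidate"]),
]
winner = best_run(runs, "accuracy")
```

Storing the data fingerprint alongside the parameters makes it possible to tell whether two runs differ because of the configuration or because the input data changed.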

Automate Without Losing Sight of the Big Picture

At the end of the day, the goal of MLOps pipelines is to speed up the delivery of actionable results that enable better business decisions. With that goal in mind, when designing an MLOps pipeline, your team may have to make concessions in terms of features in order to facilitate automation and orchestration.

Finding the ideal balance between flexibility and complexity is not easy since many moving parts are involved, and some design decisions can affect the pipeline as a whole. It doesn't help that data scientists' core skills are not aimed at the technical aspects and limitations of IT infrastructure. Take, for example, putting the trained model into a format suitable for consumption. Containerizing a model is not the same as creating an API ready to be consumed. DevOps engineers, not data scientists, typically handle these tasks, so a change in model format can be a major inconvenience.
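The gap between a model artifact and a consumable API can be illustrated with a small sketch. Everything here is a hypothetical stand-in: `LinearModel` is a toy model, the artifact is plain JSON, and `handle_request` only mimics what a real web endpoint would do with a request body.

```python
import json

class LinearModel:
    """Toy model standing in for a trained estimator."""
    def __init__(self, weights):
        self.weights = weights

    def predict(self, features):
        return sum(w * x for w, x in zip(self.weights, features))

def export_artifact(model):
    # Format 1: a serialized artifact that can be stored or shipped in an image.
    return json.dumps({"weights": model.weights})

def load_artifact(artifact):
    return LinearModel(json.loads(artifact)["weights"])

def handle_request(body, artifact):
    # Format 2: an API-style handler that accepts JSON and returns JSON.
    model = load_artifact(artifact)
    features = json.loads(body)["features"]
    return json.dumps({"prediction": model.predict(features)})

artifact = export_artifact(LinearModel([0.5, 2.0]))
response = handle_request('{"features": [2.0, 1.0]}', artifact)
```

The artifact alone is not something a business application can call; the handler adds the request parsing and response formatting that a consumer expects, which is the extra work the paragraph above refers to.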

Fortunately, the Domino platform allows data scientists to self-publish models as APIs, Docker containers, or applications as required—all from the convenience of its powerful UI and without the need to write a single line of code.

Avoid Vendor Lock-In

At this point, a timely piece of advice is to avoid at all costs the temptation to use proprietary technologies in the hope of enjoying a variety of features from a single vendor without the need for orchestration on your part. This is a mistake that your organization will end up paying for.

It's a best practice to avoid vendor lock-in, and for this, nothing is better than Domino, the only open, tool- and language-agnostic MLOps platform that is future-proof.

Remember that Model Monitoring Is Not Optional

You will find that some pipelines overlook the importance of monitoring models once they are put into production. This has to do with the false belief that the ML pipeline ends with model deployment, a serious error that goes against best practices, as monitoring is vital to ensure that model predictions remain accurate. Moreover, since models degrade over time, the need to retrain them is unavoidable.

Keep in mind from the beginning that the MLOps pipeline is cyclical; once a model is deployed, its performance must be constantly monitored to determine the right moment to jump back to the initial stage.

Domino emphasizes the importance of monitoring, which is why its platform automatically monitors for data and model quality drift and alerts you when production data is no longer consistent with the data used for training, so data scientists can retrain and republish these models promptly.
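As a rough illustration of what a data drift check can look like, the sketch below compares the distribution of a single feature in production against training using the population stability index (PSI). This is one possible technique, not a description of how Domino's monitoring works; the bin count, the `0.2` threshold (a commonly cited rule of thumb), and the sample data are assumptions.

```python
import math

def population_stability_index(expected, actual, bins=4, eps=1e-6):
    """Compare two samples of one feature; larger values mean more drift."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def shares(values):
        counts = [0] * bins
        for v in values:
            # Clamp so out-of-range production values land in the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # eps avoids log(0) for empty bins.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

def needs_retraining(training, production, threshold=0.2):
    # Threshold is an assumed rule of thumb; tune it for your own data.
    return population_stability_index(training, production) > threshold

training = [i / 20 for i in range(20)]           # values spread over 0.0 to 0.95
production = [0.5 + i / 40 for i in range(20)]   # values shifted upward
drifted = needs_retraining(training, production)
```

A check like this would run on a schedule against fresh production data, with a positive result triggering the jump back to the start of the pipeline.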

Don't Underestimate the Power of Collaboration

One final tip when designing your MLOps pipeline is not to underestimate the power of collaboration. As previously mentioned, Domino believes that a best-in-class MLOps pipeline is one that spans the entire data science lifecycle. What sense does it make for different teams to work, sometimes without knowing it, on similar problems? Wouldn't it be more efficient to pool the knowledge and experience of all data scientists?

Domino offers a centralized platform with self-service access to tools and infrastructure where data scientists can discover, reuse, and reproduce work, ultimately saving time and resources.

Damaso Sanoja is a mechanical engineer with a passion for cars and computers. He's written for both industries for more than two decades.
