Subject archive for "model-management," page 7

Justified Algorithmic Forgiveness?

Last week, Paco Nathan referenced Julia Angwin’s recent Strata keynote that covered algorithmic bias. This Domino Data Science Field Note dives a bit deeper into some of the publicly available research regarding algorithmic accountability and forgiveness, specifically around a proprietary black box model used to predict the risk of recidivism, that is, whether someone will “relapse into criminal behavior”.

By Domino | 14 min read

Themes and Conferences per Pacoid, Episode 2

Paco Nathan's column covers three themes: data science for accountability, how reinforcement learning challenges assumptions, and surprises within AI and economics.

By Paco Nathan | 30 min read

Trust in LIME: Yes, No, Maybe So?

In this Domino Data Science Field Note, we briefly discuss LIME (Local Interpretable Model-Agnostic Explanations), an algorithm and framework for generating explanations that may help data scientists, machine learning researchers, and engineers decide whether to trust the predictions of any classifier, including seemingly “black box” models.

By Ann Spencer | 7 min read

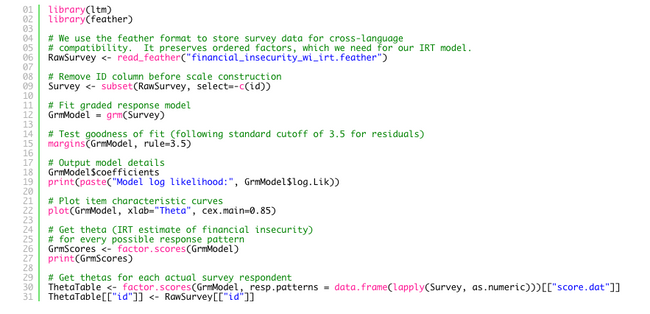

Item Response Theory in R for Survey Analysis

In this guest blog post, Derrick Higgins covers item response theory (IRT) and how data scientists can apply it within a project. As a complement to the guest blog post, there is also a demo within Domino.

By Derrick Higgins | 9 min read

Themes and Conferences per Pacoid, Episode 1

Introduction: New Monthly Series!

By Paco Nathan | 11 min read

Make Machine Learning Interpretability More Rigorous

This Domino Data Science Field Note covers a proposed definition of machine learning interpretability, why interpretability matters, and the arguments for evaluating interpretability rigorously. Insights are drawn from Finale Doshi-Velez’s talk, “A Roadmap for the Rigorous Science of Interpretability”, as well as the paper “Towards a Rigorous Science of Interpretable Machine Learning”, co-authored by Finale Doshi-Velez and Been Kim. Finale Doshi-Velez is an assistant professor of computer science at the Harvard Paulson School of Engineering, and Been Kim is a research scientist at Google Brain.

By Ann Spencer | 8 min read
