Beyond Spark: Dask and Ray as Multi-node Accelerated Compute Frameworks

Apache Spark has been the incumbent distributed compute framework for more than a decade. But its overhead and complexity have led newer frameworks such as Dask and Ray to eclipse it.

We'll discuss the history of the three frameworks, their intended use cases, and their strengths and weaknesses. Drawing on these individual trade-offs, we then ask how to select the right framework given the available infrastructure, data volumes, workload complexity, and other factors.

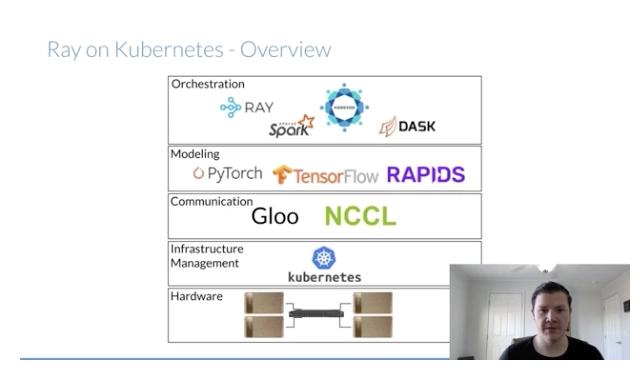

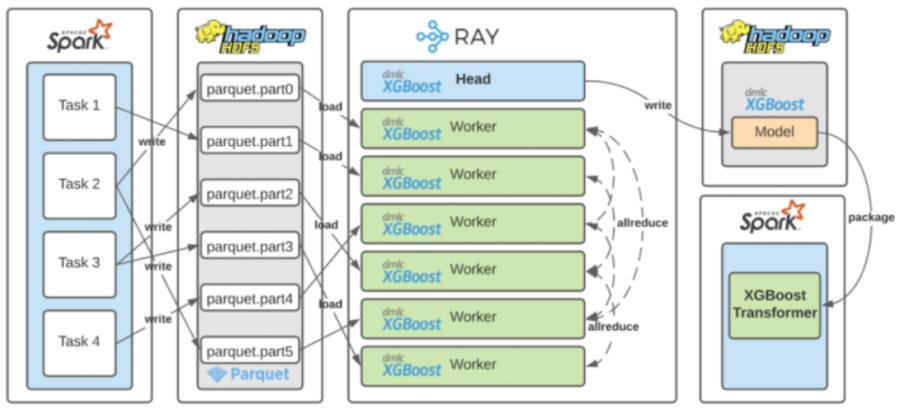

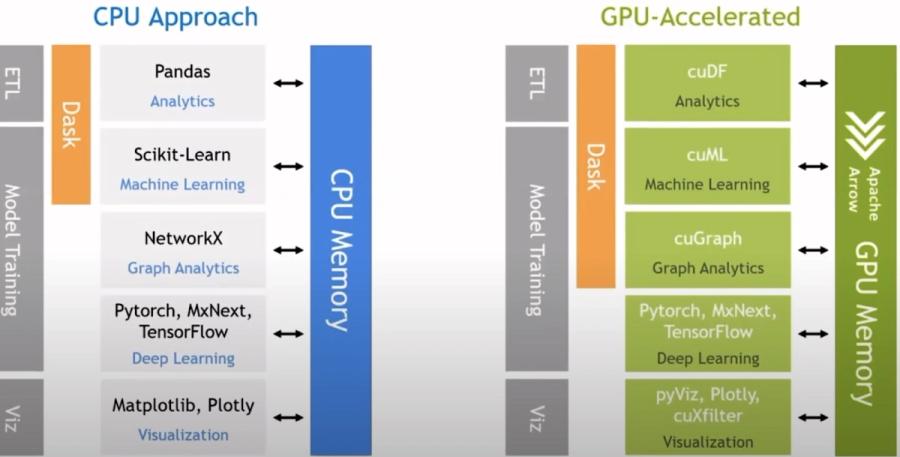

Finally, we’ll explore their capabilities in the context of GPU-accelerated computing, presenting an integrated solution that enables data scientists to easily provision a Spark/Ray/Dask cluster and access it through an integrated development environment.

Speaker: Nikolay Manchev - Principal Data Scientist for EMEA, Domino Data Lab

Related Resources

Webinar

Run complex AI training workloads using on-demand GPU-accelerated Spark/RAPIDS clusters