Subject archive for "llm"

Full LLM power and 60% cheaper: Model Cascade with Mixture of Thought on Domino

What if I told you that you could cut your LLM API spending by 60% or more without compromising on accuracy? Surprisingly, now you can.

By Subir Mansukhani and Yuval Zukerman, 7 min read

Accelerate AI with AI Hub

AI Hub is a repository of curated, templated projects from Domino and its AI ecosystem partners.

By Yuval Zukerman, 7 min read

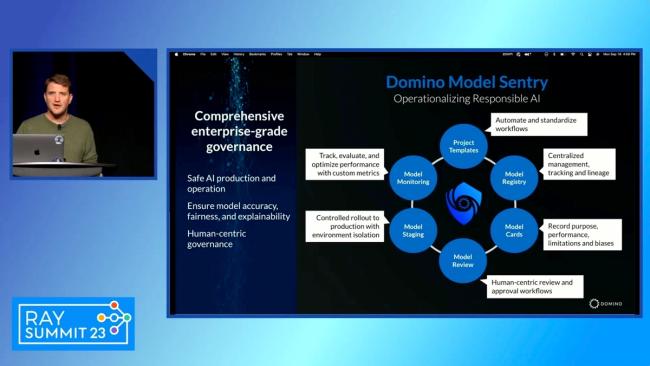

Bridging MLOps and LLMOps: Ray Summit Talk

Explore the challenges of integrating generative AI into the enterprise, the nuances of LLMOps, and how Domino's platform supports responsible AI deployment.

2 min read

Breaking Generative AI Barriers with Efficient Fine-Tuning Techniques

This blog post explores the challenges of fine-tuning large language models (LLMs) and introduces resource-optimized and parameter-efficient techniques such as quantization, LoRA, and the Zero Redundancy Optimizer (ZeRO). By fine-tuning Falcon-7B, Falcon-40B, and GPT-J-6B, we demonstrate how these techniques improve performance, reduce cost, and make better use of compute resources during LLM fine-tuning. The post also discusses the future of fine-tuning and its potential to unlock new possibilities in enterprise AI applications.

By Subir Mansukhani, 9 min read
