AI HUB

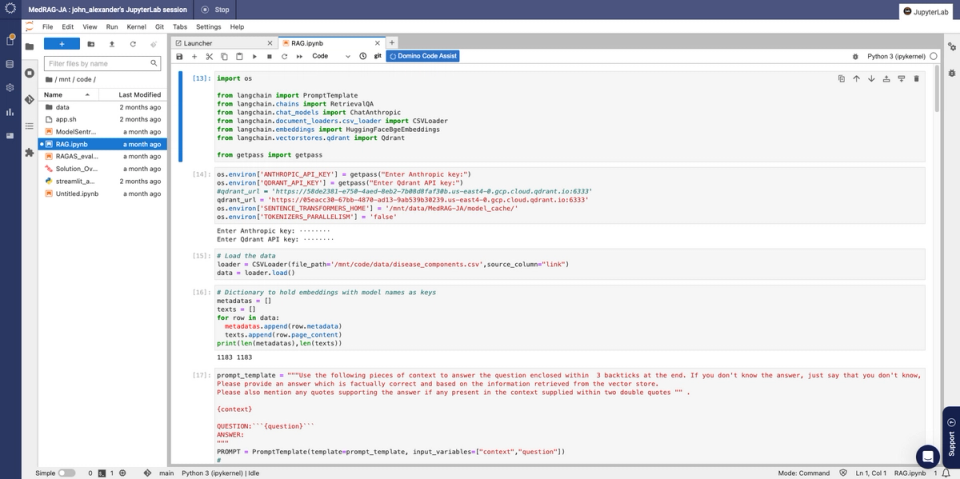

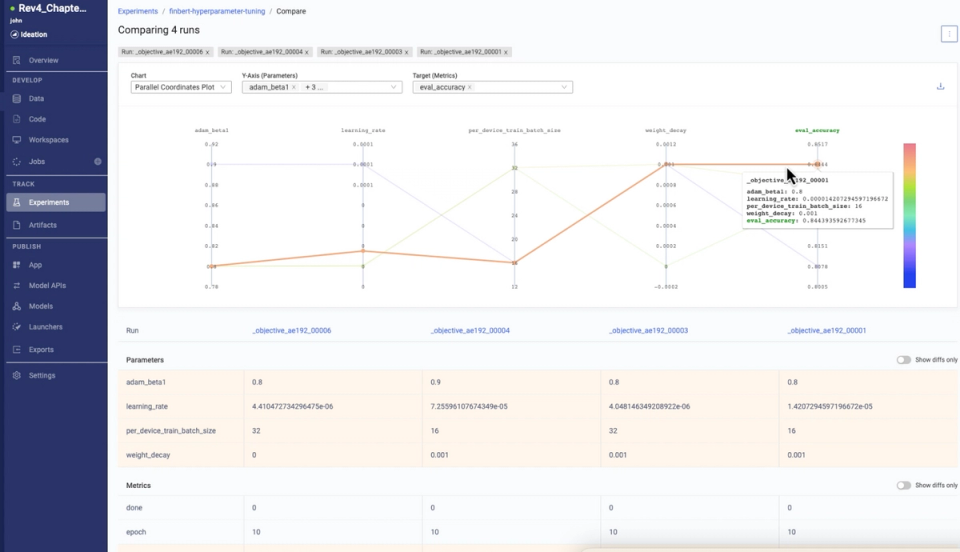

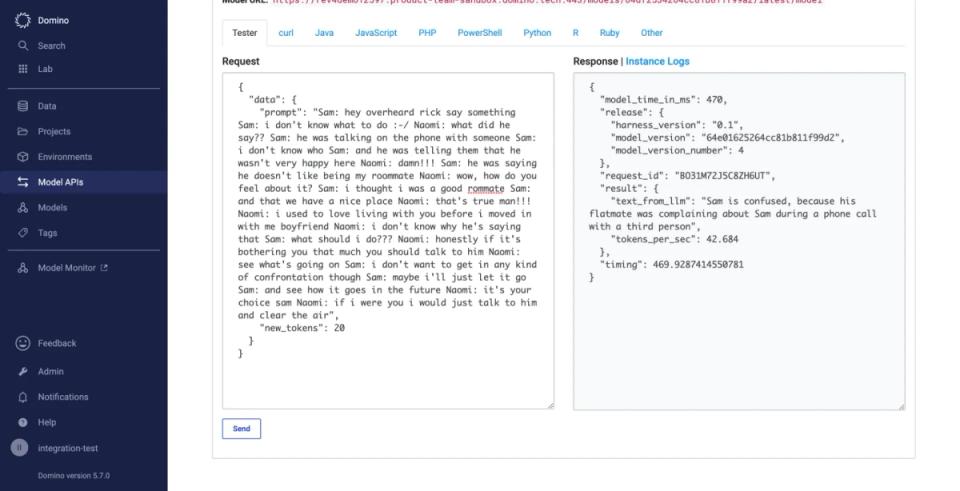

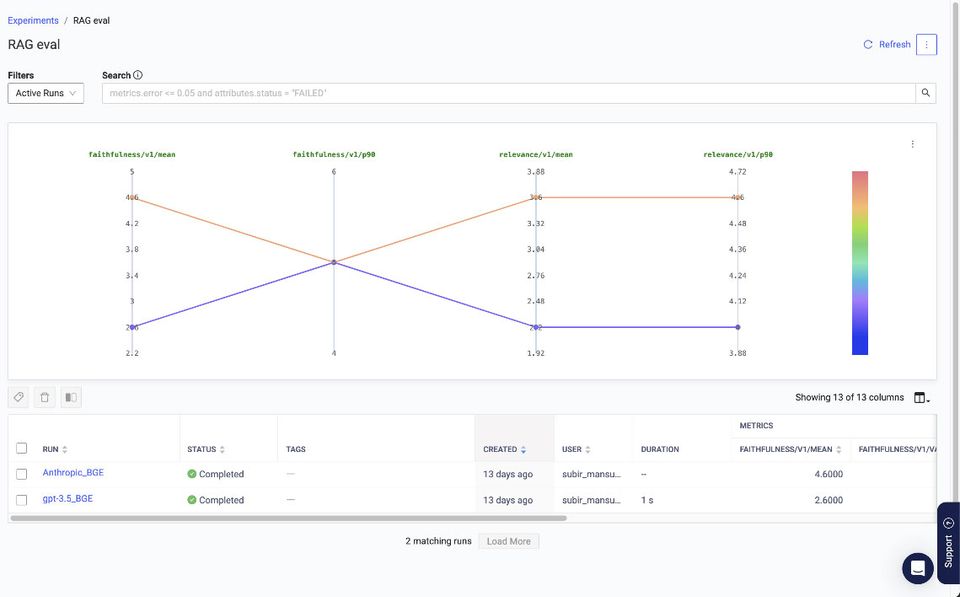

Pre-built Accelerators for GenAI

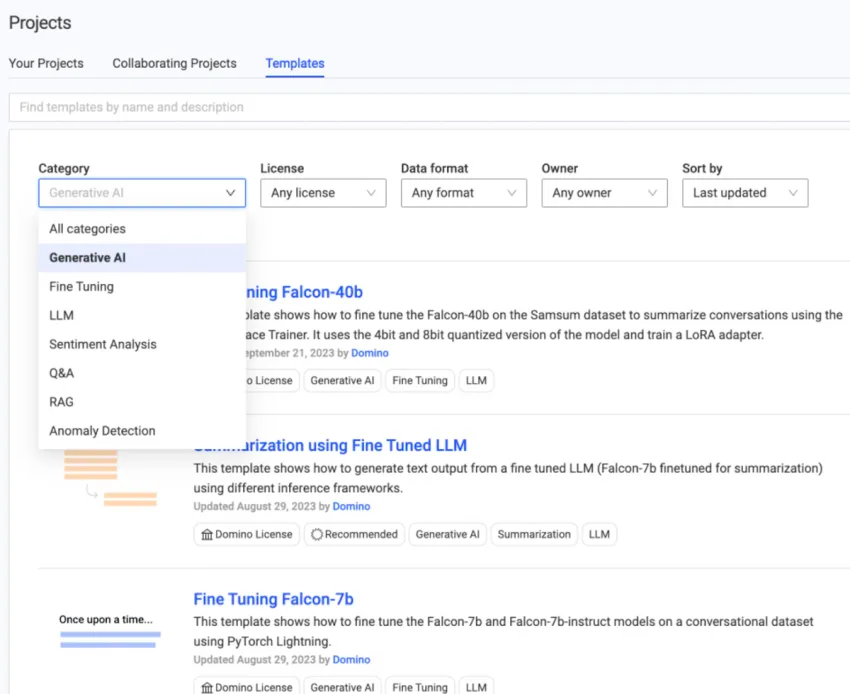

The Domino AI Hub is a collection of pre-built AI projects that help teams jumpstart GenAI development for use cases such as retrieval-augmented generation (RAG), fine-tuning, and more. Templates are fully customizable and shareable, which helps teams promote GenAI best practices and guardrails. AI Hub templates from Domino and partners such as AWS, NVIDIA, and Hugging Face help you keep pace with rapid innovation in generative AI.

![Prompt security and governance](https://cdn.sanity.io/images/kuana2sp/production-main/1736008e370d862d3ba1dcaa317ca26889e2cc8e-1117x539.svg?w=960&fit=max&auto=format)