MLOps on AWS

As machine learning and AI increasingly drive organizational performance, enterprises are realizing they need a more efficient way to move machine learning models from development into production and ongoing management.

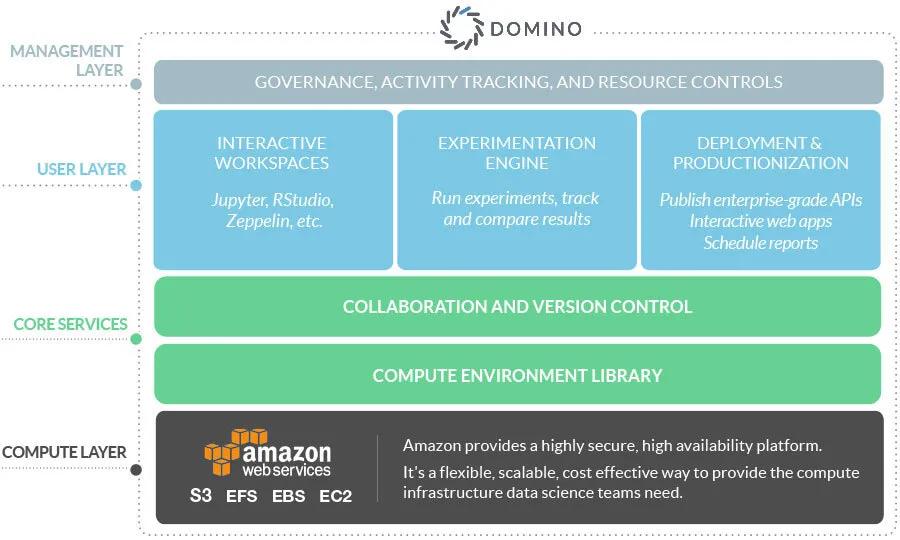

Domino’s code-driven, notebook-centric environment, combined with AWS’s expertise in large-scale enterprise model operations, makes the two a natural technical pairing for bringing machine learning models into production.

Two Powerful Options for Model Deployment

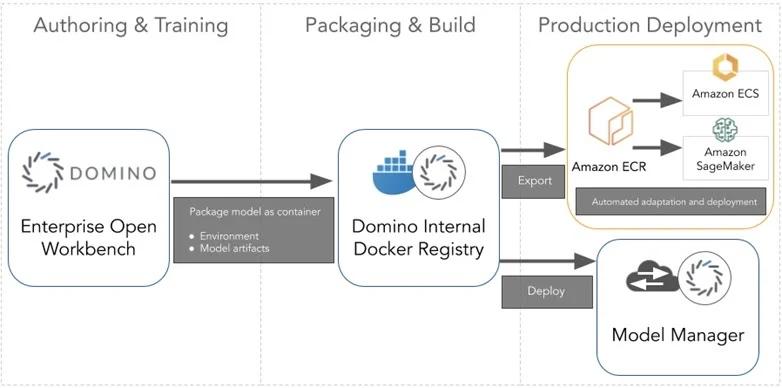

You can deploy and host models in Domino on AWS infrastructure, which provides an easy, self-service way to deploy models as API endpoints. Deploying models within Domino provides insight into the full model lineage, down to the exact version of all software used to create the function that calls the model. Domino can also provide an overview of all assets and link them to individuals, teams, and projects.

But some customers prefer Amazon SageMaker for its highly scalable, low-latency hosting. To support these situations, Domino can use a set of APIs to export a model in a format compatible with Amazon SageMaker. The export includes the packages, code, and other artifacts needed to deploy the model directly in SageMaker.
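Once a model has been exported, standing it up in SageMaker follows the usual three-step hosting flow: register a model, define an endpoint configuration, and create the endpoint. The sketch below is a minimal illustration of that flow using the boto3 SageMaker client calls `create_model`, `create_endpoint_config`, and `create_endpoint`. It does not show Domino's export APIs. It assumes the export has already produced a container image pushed to Amazon ECR and that an IAM role SageMaker can assume exists. The function name `deploy_exported_model`, the `-config`/`-endpoint` naming scheme, and the default instance type are hypothetical choices for illustration.

```python
from typing import Any


def deploy_exported_model(
    sm_client: Any,
    model_name: str,
    image_uri: str,
    execution_role_arn: str,
    instance_type: str = "ml.m5.large",  # hypothetical default; size to your workload
    initial_instance_count: int = 1,
) -> str:
    """Hypothetical helper: host an exported model image behind a SageMaker endpoint.

    Assumes sm_client is boto3.client("sagemaker") (or a compatible stub) and that
    image_uri points to a container image already pushed to Amazon ECR.
    Returns the name of the endpoint that was requested.
    """
    # Hypothetical naming scheme derived from the model name.
    endpoint_config_name = f"{model_name}-config"
    endpoint_name = f"{model_name}-endpoint"

    # 1. Register the exported container image as a SageMaker model.
    sm_client.create_model(
        ModelName=model_name,
        PrimaryContainer={"Image": image_uri},
        ExecutionRoleArn=execution_role_arn,
    )

    # 2. Describe how the model should be hosted (instance type and count).
    sm_client.create_endpoint_config(
        EndpointConfigName=endpoint_config_name,
        ProductionVariants=[
            {
                "VariantName": "AllTraffic",
                "ModelName": model_name,
                "InstanceType": instance_type,
                "InitialInstanceCount": initial_instance_count,
            }
        ],
    )

    # 3. Request the endpoint; SageMaker provisions it asynchronously.
    sm_client.create_endpoint(
        EndpointName=endpoint_name,
        EndpointConfigName=endpoint_config_name,
    )
    return endpoint_name
```

With boto3 installed and AWS credentials configured, you would pass `boto3.client("sagemaker")` as `sm_client`. Because endpoint creation is asynchronous, a caller could then block until the endpoint is ready with `sm_client.get_waiter("endpoint_in_service").wait(EndpointName=...)`. Accepting the client as a parameter, rather than creating it inside the function, also keeps the helper easy to test with a stub.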
