This article covers how to detect data drift for models that ingest image data as their input in order to prevent their silent degradation in production.

Preventing Silent Model Degradation in Production

In the real world, data is recorded by different systems and is constantly changing. How data is collected, treated and transformed affects the data itself. Change may be introduced by noise from mechanical wear and tear on physical systems, or by a fundamental shift in the underlying generative process (e.g., a change in the interest rate by a regulator, a natural calamity, the introduction of a new competitor in the market, or a change in a business strategy or process). Such changes have ramifications for the accuracy of predictions, so it is necessary to check that the assumptions made during model development still hold once models are in production.

In the context of machine learning, we consider data drift (see Gama et al. in the References) to be a change in model input data that leads to a degradation of model performance. In the remainder of this article, we cover how to detect data drift for models that ingest image data, in order to prevent their silent degradation in production.

Detecting Image Drift

Given the growing enterprise interest in deep learning, models that ingest non-traditional forms of data, such as unstructured text and images, are increasingly being put into production. In such cases, methods from statistical process control and operations research, which rely primarily on numerical data, are hard to adopt, and a new approach to monitoring models in production is needed. This article explores an approach that can be used to detect data drift for models that classify or score image data.

Model Monitoring: The Approach

Our approach makes no assumptions about the deployed model, but it requires access to the training data used to build the model and the prediction data used for scoring. An intuitive way to detect data drift for image data is to build a machine-learned representation of the training dataset and use it to reconstruct the data being presented to the model. If the reconstruction error is high, the data being presented to the model differs from the data it was trained on. The sequence of operations is as follows:

1. Learn a low-dimensional representation of the training dataset (encoder).

2. Reconstruct a validation dataset using the representation from Step 1 (decoder) and store the reconstruction loss as the baseline reconstruction loss.

3. Reconstruct each batch of data sent for predictions using the encoder and decoder from Steps 1 and 2, and store the reconstruction loss.

4. If the reconstruction loss of the data used for predictions exceeds the baseline reconstruction loss by a predefined threshold, set off an alert.
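The alerting rule above can be sketched in a few lines of Python. This is a minimal illustration rather than the code used in the rest of this article: the function names, the choice to express the threshold as a relative increase, the 10% default, and the example loss values are all assumptions made for the example.

```python
def relative_increase(baseline_loss, batch_loss):
    """Fractional increase of batch_loss over baseline_loss."""
    if baseline_loss <= 0:
        raise ValueError("baseline_loss must be positive")
    return (batch_loss - baseline_loss) / baseline_loss


def drift_alert(baseline_loss, batch_loss, threshold=0.10):
    """Return True when batch_loss exceeds the baseline by more than threshold.

    threshold is a hypothetical default (10% relative increase);
    in practice it would be tuned for each use case.
    """
    return relative_increase(baseline_loss, batch_loss) > threshold


# Hypothetical loss values, for illustration only
baseline = 0.20
print(drift_alert(baseline, 0.21))  # False: about a 5% increase
print(drift_alert(baseline, 0.30))  # True: about a 50% increase
```

A relative threshold is used here so the same setting can be reused when the baseline loss changes after retraining; an absolute difference would work equally well for a fixed model.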

Image Data Drift Detection in Action

The steps below walk through the relevant code snippets for detecting drift on image data.

Step 1: Install the required dependencies for the project by adding the following to your Dockerfile.

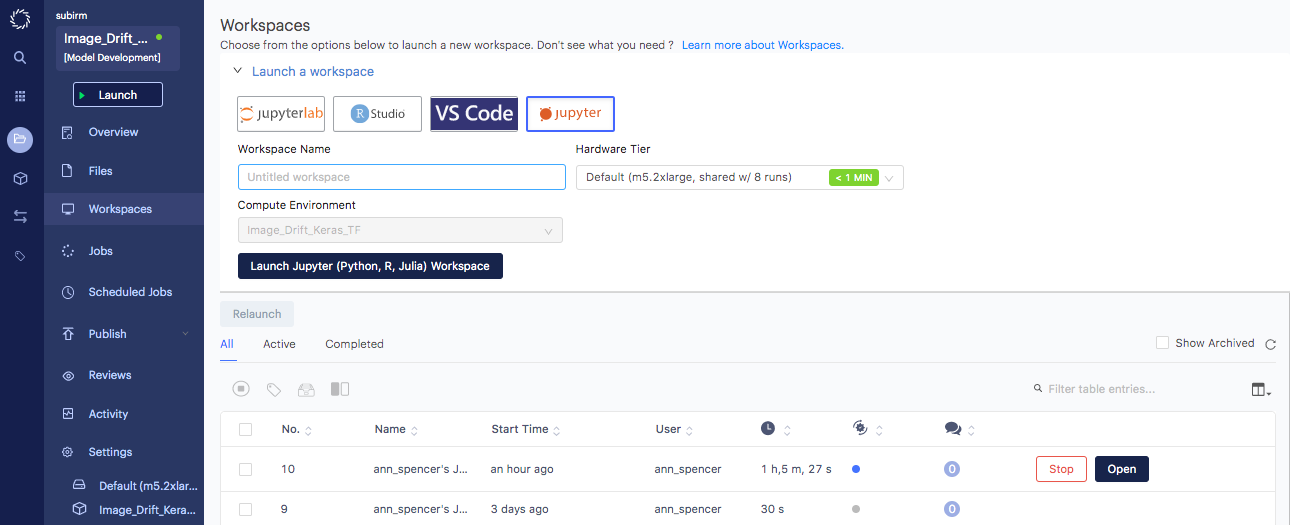

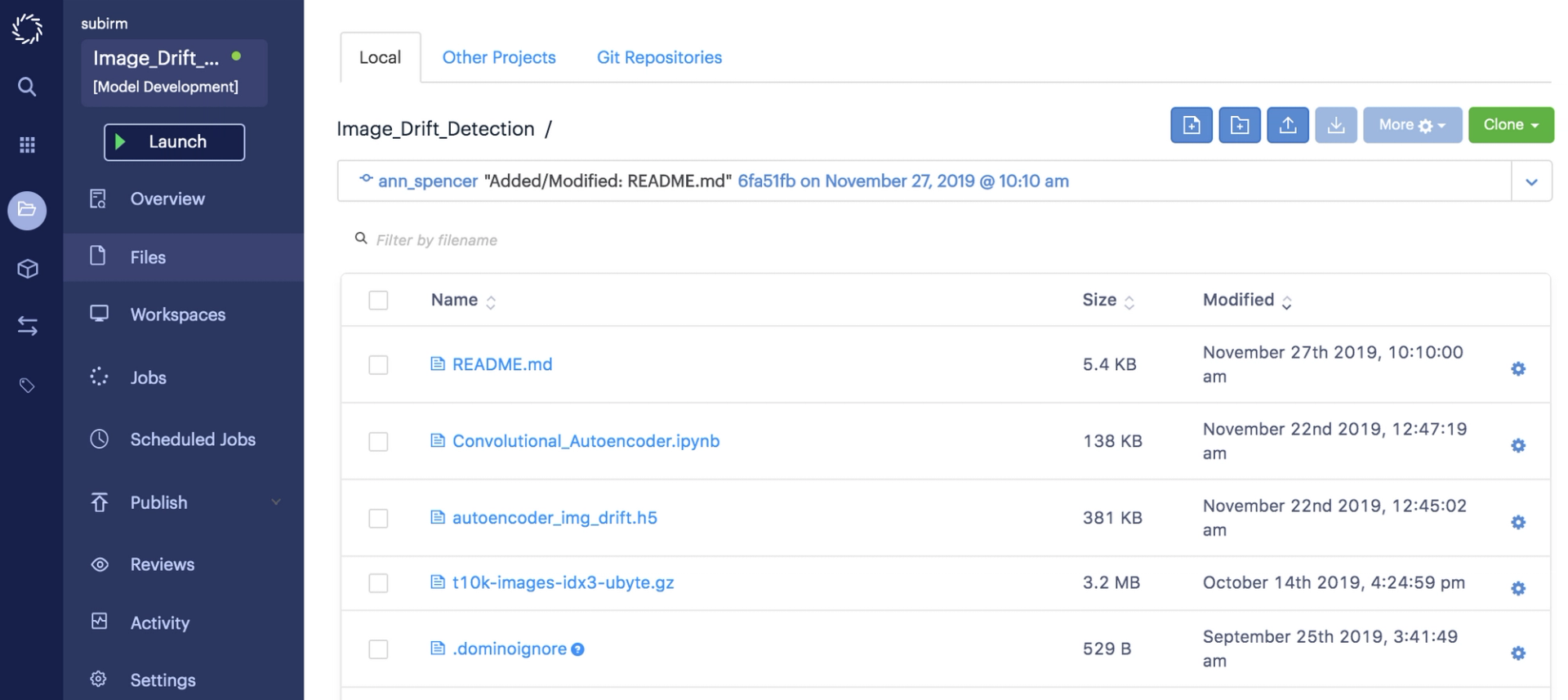

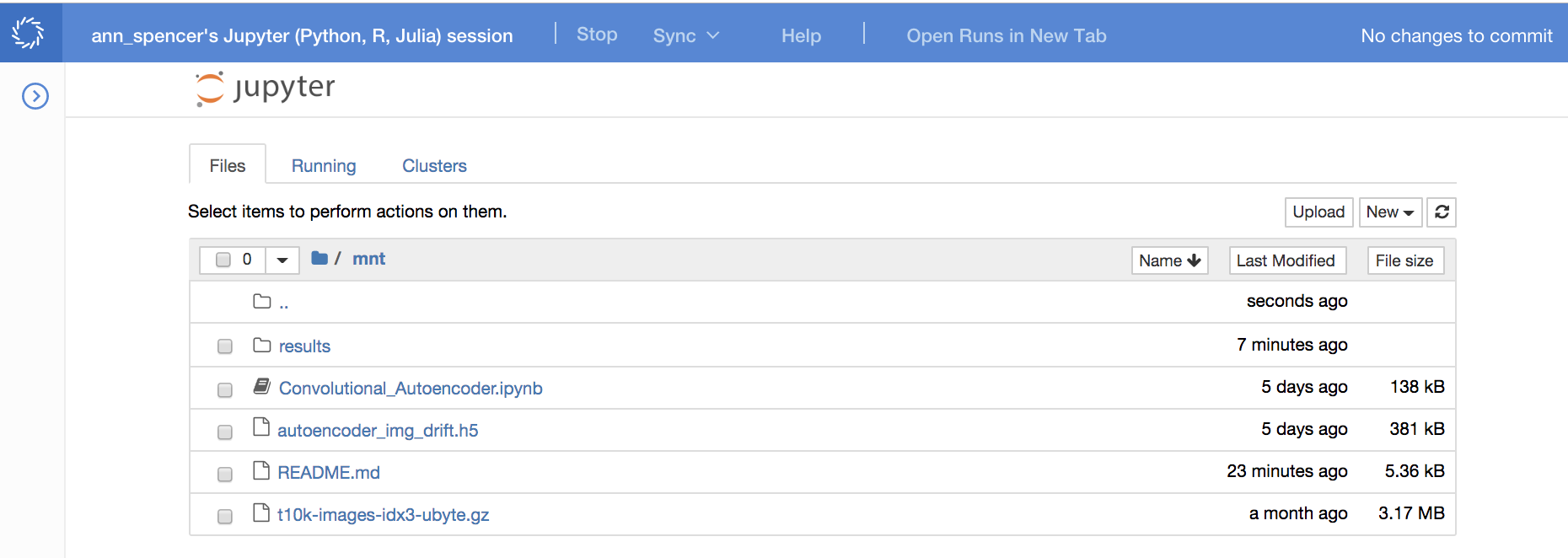

RUN pip install numpy==1.13.1

RUN pip install tensorflow==1.2.1

RUN pip install Keras==2.0.6

Step 2: Start a Jupyter notebook workspace and load the required libraries.

# Load libraries

from keras.layers import Input, Conv2D, MaxPooling2D, UpSampling2D

from keras.models import Model

from keras import backend as K

from keras import regularizers

from keras.callbacks import ModelCheckpoint

from keras.datasets import mnist

import numpy as np

import matplotlib.pyplot as plt

Step 3: Specify the architecture for the autoencoder.

# Specify the architecture for the autoencoder

input_img = Input(shape=(28, 28, 1))

# Encoder

x = Conv2D(32, (3, 3), activation='relu', padding='same')(input_img)

x = MaxPooling2D((2, 2), padding='same')(x)

x = Conv2D(32, (3, 3), activation='relu', padding='same')(x)

encoded = MaxPooling2D((2, 2), padding='same')(x)

# Decoder

x = Conv2D(32, (3, 3), activation='relu', padding='same')(encoded)

x = UpSampling2D((2, 2))(x)

x = Conv2D(32, (3, 3), activation='relu', padding='same')(x)

x = UpSampling2D((2, 2))(x)

decoded = Conv2D(1, (3, 3), activation='sigmoid', padding='same')(x)

autoencoder = Model(input_img, decoded)

autoencoder.compile(optimizer='adadelta', loss='binary_crossentropy')

Step 4: Generate the train, test and noisy MNIST data sets.

# Generate the train and test sets

(x_train, _), (x_test, _) = mnist.load_data()

x_train = x_train.astype('float32') / 255.

x_test = x_test.astype('float32') / 255.

x_train = np.reshape(x_train, (len(x_train), 28, 28, 1))

x_test = np.reshape(x_test, (len(x_test), 28, 28, 1))

# Generate some noisy data

noise_factor = 0.5

x_train_noisy = x_train + noise_factor * np.random.normal(loc=0.0, scale=1.0, size=x_train.shape)

x_test_noisy = x_test + noise_factor * np.random.normal(loc=0.0, scale=1.0, size=x_test.shape)

x_train_noisy = np.clip(x_train_noisy, 0., 1.)

x_test_noisy = np.clip(x_test_noisy, 0., 1.)

Step 5: Train the convolutional autoencoder.

# Checkpoint the model to retrieve it later

cp = ModelCheckpoint(filepath="autoencoder_img_drift.h5",

save_best_only=True,

verbose=0)

# Store training history

history = autoencoder.fit(x_train_noisy, x_train, epochs=10,

batch_size=128,

shuffle=True,

validation_data=(x_test_noisy, x_test),

callbacks=[cp]).history

The validation loss we obtained is 0.1011.
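Because `fit(...).history` is a plain dictionary of per-epoch metrics, the baseline loss can be read from it directly. The sketch below assumes the dictionary contains a `val_loss` list (which Keras records when `validation_data` is passed) and uses made-up loss values; `best_validation_loss` is a hypothetical helper name. Taking the minimum mirrors what `ModelCheckpoint(save_best_only=True)` keeps on disk, since it monitors `val_loss` by default.

```python
def best_validation_loss(history):
    """Return (epoch_number, loss) for the lowest validation loss.

    history is a dict of per-epoch metric lists, such as the one
    returned by Keras via fit(...).history.
    """
    val_losses = history["val_loss"]
    if not val_losses:
        raise ValueError("history contains no validation losses")
    best_index = min(range(len(val_losses)), key=lambda i: val_losses[i])
    return best_index + 1, val_losses[best_index]  # epochs are 1-based


# Made-up training history, for illustration only
example_history = {"loss": [0.25, 0.15, 0.12], "val_loss": [0.20, 0.13, 0.14]}
epoch, baseline_loss = best_validation_loss(example_history)
print(epoch, baseline_loss)  # 2 0.13
```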

Step 6: Get the reconstruction loss for the noisy MNIST data set.

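decoded_imgs = autoencoder.predict(x_test_noisy)  # reconstructed images (assumed definition; not shown in the original snippet)

x_test_noisy_loss = autoencoder.evaluate(x_test_noisy, decoded_imgs)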

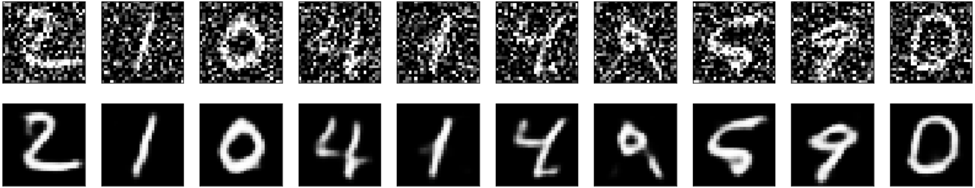

Sample of noisy input data (top row) and reconstructed images (bottom row)

The reconstruction error that we obtained on the noisy MNIST data set is 0.1024. Compared with the baseline reconstruction error of 0.1011 on the validation dataset, this is a 1.3% increase in the error.

Step 7: Get the reconstruction loss for the notMNIST dataset and compare it with the validation loss for the MNIST dataset and the loss for noisy MNIST.

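non_mnist_pred = autoencoder.predict(non_mnist_data)  # assumes non_mnist_data holds notMNIST images shaped (n, 28, 28, 1)

non_mnist_data_loss = autoencoder.evaluate(non_mnist_data, non_mnist_pred)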

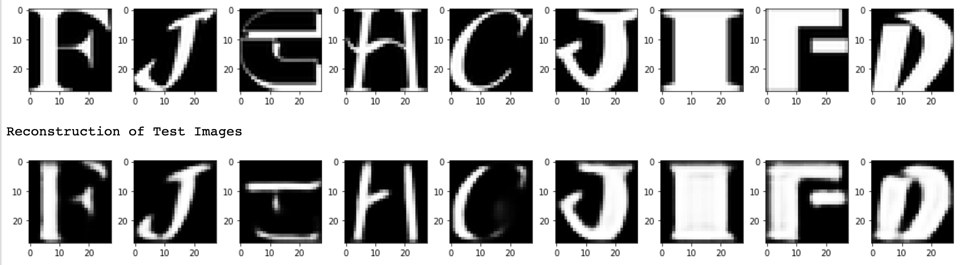

Sample of notMNIST data (top row) and its corresponding reconstruction (bottom row)

The reconstruction error that we obtained on the notMNIST data set is 0.1458. Compared with the baseline reconstruction error of 0.1011 on the validation dataset, this is a 44.2% increase in the error.
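The percentage figures quoted in Steps 6 and 7 follow from a simple relative-difference calculation against the baseline validation loss. The snippet below reproduces that arithmetic using the three loss values reported in this article; the helper name `percent_increase` is only for illustration.

```python
def percent_increase(baseline, observed):
    """Percentage by which observed exceeds baseline."""
    return 100.0 * (observed - baseline) / baseline


baseline_loss = 0.1011     # validation loss from Step 5
noisy_mnist_loss = 0.1024  # reconstruction loss from Step 6
not_mnist_loss = 0.1458    # reconstruction loss from Step 7

print(round(percent_increase(baseline_loss, noisy_mnist_loss), 1))  # 1.3
print(round(percent_increase(baseline_loss, not_mnist_loss), 1))    # 44.2
```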

From this example, it is clear that the reconstruction loss spikes when the convolutional autoencoder is made to reconstruct a dataset that is different from the one used to train it. Once such a spike is detected, the next step is either to retrain the model with new data or to investigate what led to the change in the data.
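When investigating a spike, it can help to look at the reconstruction error per image rather than only the batch average that `evaluate` returns. The following NumPy sketch computes a per-image binary cross-entropy between target images and reconstructions scaled to [0, 1]. The function name, the `eps` clipping value, and the toy arrays are assumptions made for illustration and are not part of the original notebook.

```python
import numpy as np


def per_image_bce(targets, reconstructions, eps=1e-7):
    """Mean binary cross-entropy for each image in a batch.

    Both arrays are expected to have shape (n, height, width, channels)
    with values in [0, 1].
    """
    targets = np.asarray(targets, dtype="float64")
    # Clip reconstructions away from 0 and 1 so the logarithms stay finite
    recon = np.clip(np.asarray(reconstructions, dtype="float64"), eps, 1.0 - eps)
    bce = -(targets * np.log(recon) + (1.0 - targets) * np.log(1.0 - recon))
    # Average over every axis except the batch axis
    return bce.reshape(len(bce), -1).mean(axis=1)


# Toy batch: two identical 2x2 single-channel "images"
targets = np.array([[[[0.0], [1.0]], [[1.0], [0.0]]],
                    [[[0.0], [1.0]], [[1.0], [0.0]]]])
close = np.where(targets == 1.0, 0.9, 0.1)  # close reconstruction
flat = np.full_like(targets, 0.5)           # uninformative reconstruction
recon = np.stack([close[0], flat[1]])

errors = per_image_bce(targets, recon)
print(errors.argmax())  # index of the worst-reconstructed image
```

Sorting or plotting these per-image errors is one way to find which inputs are driving an increase in the batch-level loss.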

Conclusion

In this example, we saw that a convolutional autoencoder is capable of quantifying differences in image datasets on the basis of a reconstruction error. Using this approach of comparing reconstruction errors, we can detect changes in the input that is being presented to image classifiers. There is, however, room for improvement. For one, we used a trial-and-error approach to settle on the architecture of the convolutional autoencoder; a neural architecture search algorithm could potentially yield a more accurate autoencoder. Another improvement would be to apply a data augmentation strategy to the training dataset, making the autoencoder more robust to noisy data and to variations in the rotation and translation of images.

If you would like to know more about how you can use Domino to monitor your production models, you can watch our Webinar on “Monitoring Models at Scale”. It will cover continuous monitoring for data drift and model quality.

References

- Gama, João; Žliobaitė, Indrė; Bifet, Albert; Pechenizkiy, Mykola; and Bouchachia, Abdelhamid. “A Survey on Concept Drift Adaptation”. ACM Computing Surveys, Volume 1, Article 1 (January 2013).

- LeCun, Yann; Cortes, Corinna; and Burges, Christopher J.C. “The MNIST Database of Handwritten Digits”.

- notMNIST dataset

Subir Mansukhani is Staff Software Engineer - 2 at Domino Data Lab. Previously he was the Co-Founder and Chief Data Scientist at Intuition.AI.
