We benchmarked AWS’s new G3 instances for deep learning tasks and found they significantly outperform the older P2 instances. The new G3 instances are now available for use in Domino.

AWS recently announced a new GPU-powered EC2 instance type, the G3. These new G3 instances are now available on Domino, so you can use them to run your data science workloads with one click and zero setup.

GPU-powered EC2 instances in AWS

According to AWS, the G3 instances are built for graphics-intensive applications like 3D visualizations, whereas P2 instances are built for general-purpose GPU computing like machine learning and computational finance. We wanted to know how the G3 instances performed against the P2 instances. Could the pricier G3 instances be worth the money for some deep learning applications? We will review the specifications of AWS’s GPU-powered instances, benchmark the instances on a convolutional neural network and a recurrent neural network, and see how the performance stacks up against their costs.

We started by comparing the specifications of the GPUs that power the G3 and P2 instances. The G3 instances are powered by NVIDIA Tesla M60 GPUs. The M60 has a much newer architecture than the K80 or the K520, which power the AWS P2 and G2 instances, respectively, so you would expect better performance. However, GPU performance on neural networks depends on several factors:

- A large number of CUDA cores will increase the parallelism of your computations.

- A large memory size on the GPU will let you use larger batch sizes when you train your neural network.

- A high memory bandwidth lets the GPU move data between its own memory and its cores quickly. If you have the highest-performing GPU in the world but can’t feed data to its cores fast enough, the cores sit idle during each batch and the training process slows down.

- The clock speed is the number of cycles per second at which each CUDA core runs, so a higher clock speed means more calculations per second on each core.

Below are the specifications for the GPUs that power the different EC2 instances on AWS.

| Instance | GPU | Architecture | CUDA cores | GPU memory | Memory bandwidth | Clock speed |
|---|---|---|---|---|---|---|
| G2 | NVIDIA GRID K520 | 2 x Kepler | 3072 | 8 GB of GDDR5 | 320 GB/sec | 800 MHz |
| P2 | NVIDIA Tesla K80 | 2 x Kepler | 4992 | 24 GB of GDDR5 | 480 GB/sec | 875 MHz |
| G3 | NVIDIA Tesla M60 | 2 x Maxwell | 4096 | 16 GB of GDDR5 | 320 GB/sec | 930 MHz |
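As a rough way to combine two of these columns, theoretical peak single-precision throughput is often estimated as CUDA cores × clock speed × 2, counting one fused multiply-add per core per cycle as two operations. The sketch below applies that rule of thumb to the figures in the table. The function name and dictionary layout are illustrative assumptions, and the result is an upper bound on paper, not a measurement.

```python
# Rule-of-thumb peak throughput: cores * clock * 2 (one fused multiply-add
# per core per cycle, counted as two floating-point operations).
# Figures are copied from the table above; names here are illustrative.

GPU_SPECS = {
    "G2 (GRID K520)": {"cuda_cores": 3072, "clock_mhz": 800},
    "P2 (Tesla K80)": {"cuda_cores": 4992, "clock_mhz": 875},
    "G3 (Tesla M60)": {"cuda_cores": 4096, "clock_mhz": 930},
}


def peak_tflops(cuda_cores: int, clock_mhz: float) -> float:
    """Estimate theoretical peak single-precision TFLOPS."""
    flops = cuda_cores * clock_mhz * 1e6 * 2
    return flops / 1e12


PEAK = {name: peak_tflops(**spec) for name, spec in GPU_SPECS.items()}

for name, tflops in PEAK.items():
    print(f"{name}: {tflops:.2f} TFLOPS (theoretical)")
```

On these paper numbers the K80 comes out ahead of the M60, so core count and clock speed alone are not enough to predict which instance trains faster. That is one reason to run a benchmark rather than rely on the specification sheet.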

We took the new G3 instances out for a test drive using a compute environment already set up in Domino, pre-loaded with CUDA drivers and TensorFlow. For each run, we switched the hardware tier among the G3, P2, and G2 instances and kept the GPU tools compute environment selected to run our code.

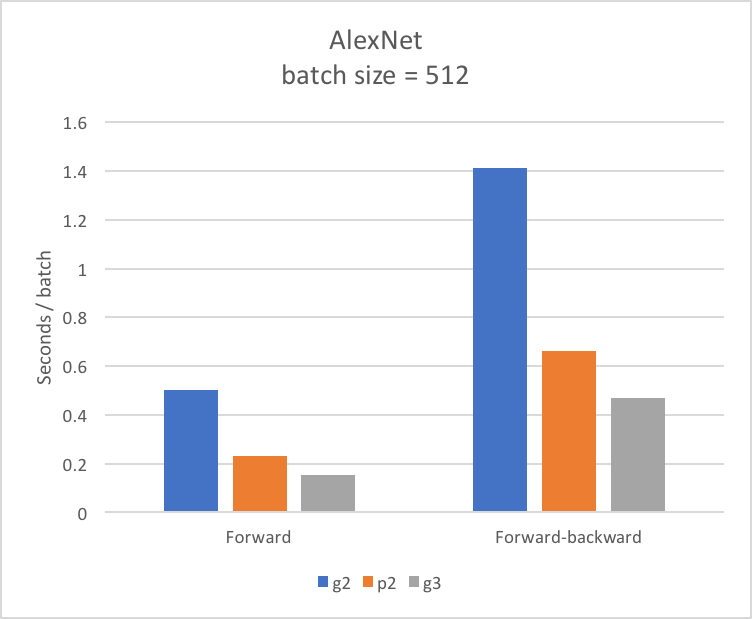

Convolutional Neural Net Benchmark

We used the AlexNet benchmark in TensorFlow to compare the performance across all three instance types. Despite the lower number of CUDA cores, smaller memory size, and smaller memory bandwidth, the G3 instances still performed better than their P2 counterparts.
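For readers who want to run a similar comparison, the core of a per-batch timing benchmark can be sketched as follows. This is not the AlexNet benchmark script itself: `time_batches` and `fake_training_step` are hypothetical names, and the stand-in step would need to be replaced with a real forward-backward pass. Warm-up batches are discarded because the first few iterations typically include one-time setup costs.

```python
import time
from typing import Callable


def time_batches(step_fn: Callable[[], None],
                 num_batches: int = 10,
                 warmup: int = 2) -> float:
    """Return the mean wall-clock seconds per call of step_fn."""
    if num_batches <= 0:
        raise ValueError("num_batches must be positive")
    # Discard warm-up iterations so one-time setup does not skew the mean.
    for _ in range(warmup):
        step_fn()
    start = time.perf_counter()
    for _ in range(num_batches):
        step_fn()
    elapsed = time.perf_counter() - start
    return elapsed / num_batches


def fake_training_step() -> None:
    """Hypothetical stand-in for one forward-backward pass."""
    sum(i * i for i in range(10_000))


seconds_per_batch = time_batches(fake_training_step, num_batches=5)
print(f"{seconds_per_batch:.6f} s/batch")
```

With a GPU framework, the step should block until the device has finished its work (for example by fetching a result), so that the timer measures completed batches and not just queued ones.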

The new G3 instances are clearly faster at training AlexNet, a standard benchmark for convolutional neural networks. Are they worth the price?

We looked at the relative increase in performance and the relative increase in price, using the G2 as the baseline, and the answer seems like an emphatic “Yes.” For about 1.75 times the G2 price, the G3 delivers about 3 times the performance. The G3 instances are worth the higher costs in the long run, especially if you’re going to be training some large convolutional neural nets.

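| Instance | AlexNet benchmark time (lower is better) | Price per hour | Relative performance | Relative price | Performance-to-price ratio |
|---|---|---|---|---|---|
| G2 | 1.41 | $0.65 | 1 | 1 | 1 |
| P2 | 0.66 | $0.90 | 2.14 | 1.38 | 1.54 |
| G3 | 0.47 | $1.14 | 3.02 | 1.75 | 1.72 |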
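The relative columns can be reproduced from the first two with a few lines of arithmetic. The sketch below assumes the second column is a benchmark time (lower is better) and the third is the instance price, with the G2 as the baseline. The names `RESULTS` and `compare` are illustrative.

```python
# Benchmark time (lower is better) and price, copied from the table above.
RESULTS = {
    "G2": {"time": 1.41, "price": 0.65},
    "P2": {"time": 0.66, "price": 0.90},
    "G3": {"time": 0.47, "price": 1.14},
}


def compare(results: dict, baseline: str = "G2") -> dict:
    """Compute performance, price, and their ratio relative to a baseline."""
    base = results[baseline]
    table = {}
    for name, row in results.items():
        # A shorter time means higher performance, so the ratio is inverted.
        rel_perf = base["time"] / row["time"]
        rel_price = row["price"] / base["price"]
        table[name] = {
            "rel_perf": rel_perf,
            "rel_price": rel_price,
            "perf_per_price": rel_perf / rel_price,
        }
    return table


COMPARISON = compare(RESULTS)
for name, row in COMPARISON.items():
    print(f"{name}: perf x{row['rel_perf']:.2f}, "
          f"price x{row['rel_price']:.2f}, "
          f"ratio {row['perf_per_price']:.2f}")
```

Because the times in the table are rounded, the recomputed values can differ from the published ones in the second decimal place. A performance-to-price ratio above 1 means the instance does more work per dollar than the baseline.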

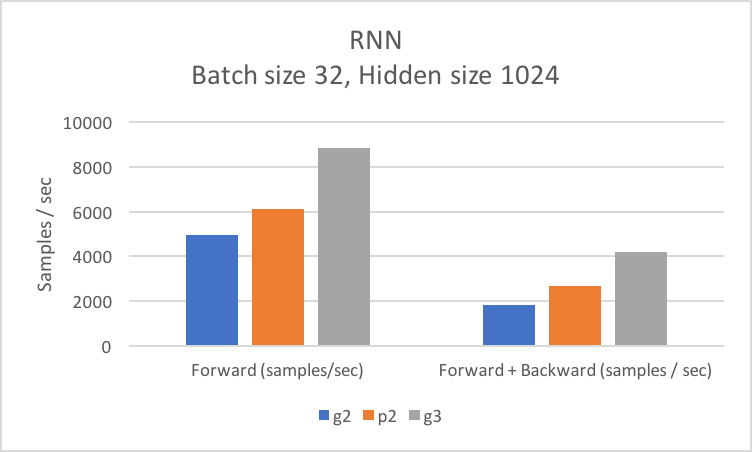

Recurrent Neural Net Benchmark

We also wanted to see how recurrent neural networks in TensorFlow performed across the three available GPU instances, using a popular RNN benchmark.

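| Instance | RNN benchmark throughput (higher is better) | Price per hour | Relative performance | Relative price | Performance-to-price ratio |
|---|---|---|---|---|---|
| G2 | 1834 | $0.65 | 1 | 1 | 1 |
| P2 | 2675 | $0.90 | 1.46 | 1.38 | 1.05 |
| G3 | 4177 | $1.14 | 2.28 | 1.75 | 1.3 |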

Again, the G3 instances are clearly faster at training the RNN than the G2 and P2 instances, and the increased cost of both newer instances is worth it, if only barely in the P2’s case (a performance-to-price ratio of 1.05, versus 1.3 for the G3). So, if you’re going to be running a big RNN model, using the G3 instances will most likely save you money down the road, according to the RNN benchmark.
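To put the savings in concrete terms, the cost of a fixed training job on each instance can be compared with the G2. The sketch below assumes the second column of the RNN table is a throughput (work per unit time) and the third is a price per unit time, so the cost of a job is proportional to price divided by throughput. The names `RNN_RESULTS` and `relative_job_cost` are illustrative.

```python
# Throughput (higher is better) and price, copied from the RNN table.
RNN_RESULTS = {
    "G2": {"throughput": 1834, "price": 0.65},
    "P2": {"throughput": 2675, "price": 0.90},
    "G3": {"throughput": 4177, "price": 1.14},
}


def relative_job_cost(results: dict, baseline: str = "G2") -> dict:
    """Cost of a fixed amount of work on each instance, relative to baseline.

    Cost of a job is price * (work / throughput); the amount of work cancels
    when dividing by the baseline, so no units are needed.
    """
    base = results[baseline]
    base_cost = base["price"] / base["throughput"]
    return {
        name: (row["price"] / row["throughput"]) / base_cost
        for name, row in results.items()
    }


JOB_COST = relative_job_cost(RNN_RESULTS)
for name, cost in JOB_COST.items():
    print(f"{name}: {cost:.2f}x the G2 cost for the same training job")
```

Under these assumptions, the same job costs roughly 5% less on the P2 and roughly 23% less on the G3 than on the G2, while also finishing sooner.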
